It’s Peer Review Week! A perfect time to post my notes from the 10th International Congress on Peer Review and Scientific Publication, which was held two weeks ago, September 3-5, 2025, at the Swissôtel in Chicago.

This was my first time attending this congress. I tried to live-post all the talks on BlueSky [except for one session where I sneaked out].

You can find most posts about this conference under the hashtag #PRC10 on BlueSky or X. Andrew Porter @retropz.bsky.social, Research Integrity and Training Adviser at the Cancer Research UK Manchester Institute, created a BlueSky feed as well.

This post will contain (lightly edited) notes from Day 1. Click here to see the posts from [Day 2] and [Day 3].

Opening and welcome session

Good morning, everyone! Live from the Swissôtel in Chicago, I will be posting from the Tenth International Congress on Peer Review and Scientific Publication. The room was empty 30 min ago, but is quickly filling up. #PRC10 peerreviewcongress.org

John Ioannidis is opening the congress by welcoming everyone online and in the room, and giving some housekeeping rules.

Then, Ana Marušić takes the floor with the Drummond Rennie Lecture ‘Forward to the Past—Making Contributors Accountable’. How do you hold all authors (sometimes 100s or 1000s!) accountable for the contents of a paper? Other challenges: authors who want to remain anonymous, group authorship, and AI as an author.

AM: New problems: fake affiliations (‘octopus affiliations’) and authors without an ORCID. We need better trust markers. CRediT addresses some issues: www.elsevier.com But people are always going to lie about their contributions! jamanetwork.com

AM: We should define authorship contributions at the start of the study, not at the end. Kiemer et al.: journals.plos.org We should continue doing research on authorship and move toward an organizational and community culture of responsible authorship.

Author and Reviewer Use of AI

After a Q&A session, with questions from in-room and online participants, we will proceed to the first session of the day: ‘Author and Reviewer Use of AI’ (AI = artificial intelligence), featuring four presentations.

Isamme AlFayyad with ‘Authors Self-disclosed Use of Artificial Intelligence in Research: Submissions to 49 BMJ Group Biomedical Journals’. Under BMJ Group’s 2023 policy on AI use by authors, authors are asked: ‘Was AI used for the creation of this manuscript?’ We studied the responses for 25k manuscripts.

IA: Only 5.7% of authors answered yes to the question about AI use, a much lower percentage than found in self-reporting studies. Most of them used ChatGPT, mostly to improve the quality of their writing (this was associated with European and Asian authors). Next, we will test the manuscripts with AI-detection tools.

Discussion/Q&A: * We should distinguish between AI use to improve language and AI use in the methods. * Which AI-detection tools are reliable? Most tools are not great. * Do journal editors respond differently if authors disclose the use of AI?

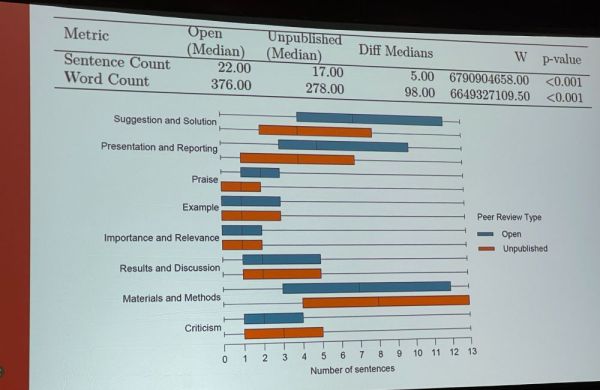

Next (program change): Mario Malički with ‘Comparison of Content in Published and Unpublished Peer Review Reports’: We compared 140k open (Springer Nature) vs 117k unpublished (Elsevier) peer review reports from 233 medical journals (2016-2021, before LLMs). The language was analyzed by AI models.

Open peer reviews were longer, contained slightly more praise, were a bit more informative, had more suggestions for improvement, and were more similar to each other. Women and reviewers with Western affiliations wrote longer reports. Open peer review may help make reports less negative.

Discussion: * Some fields (e.g., AI) have even more open processes, where reviews are posted openly while peer review is ongoing. The medical field is more hesitant (also fewer preprints!). * How about consent? Is it ethical to study reviews, in particular those that were unpublished?

Roy Perlis @royperlis.bsky.social with ‘Factors Associated With Author and Reviewer Declared Use of AI in Medical Journals’, presenting the JAMA view. In 2023, JAMA added questions about the use of AI to create or edit manuscripts; the share of authors declaring AI use increased from 2.5% to 4% by May 2025. jamanetwork.com

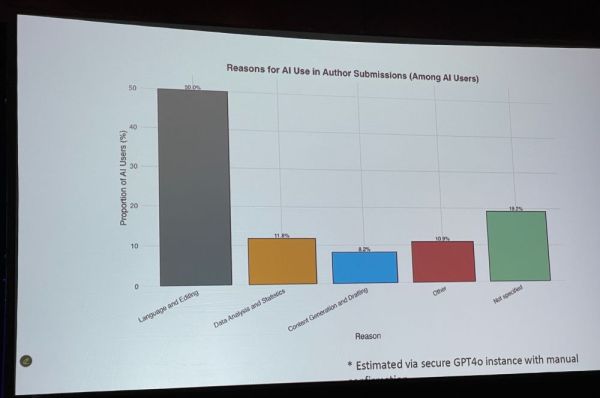

RP: Most authors used AI for language and editing. Authors who declared AI use were over-represented among Letters to the Editor. JAMA also asks reviewers to disclose their use of AI (while stating that it is not allowed); fewer than 1% do. Reviews that used AI had slightly better review ratings.

Discussion: * How about the use of AI in revisions? * Do authors answer truthfully, or give the desired answer? * Any correlation with industry-sponsored affiliations? * Is JAMA using AI in the editorial process? * Why was the % of authors reporting AI use so low? – It will likely increase.

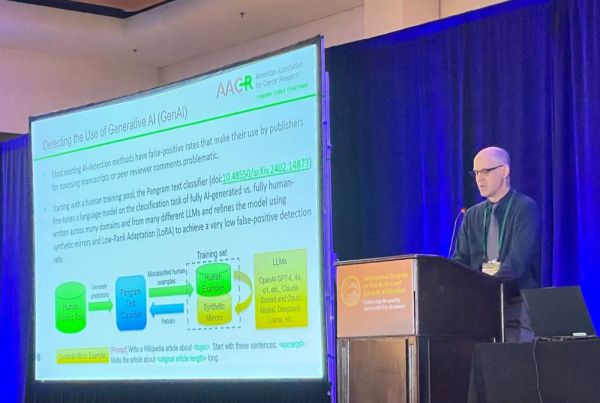

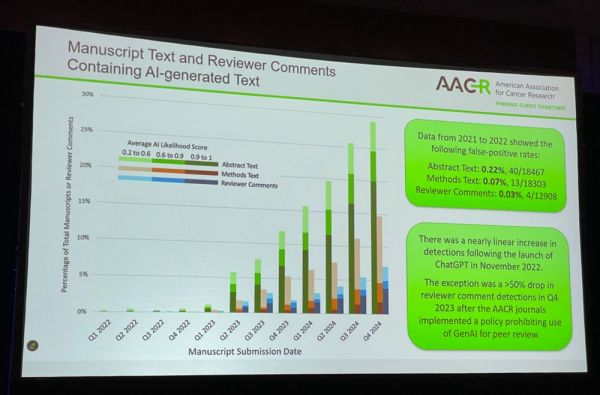

Next: Daniel Evanko with ‘Quantifying and Assessing the Use of Generative AI by Authors and Reviewers in the Cancer Research Field’: We used the Pangram text classifier (arxiv.org) and studied 46.5k manuscripts submitted to @theaacr.bsky.social AACR journals.

DE: We detected a linear increase in AI use after the launch of ChatGPT in November 2022. Non-native English-speaking authors used about 2x more AI; there were no clear associations with journal characteristics. Manuscripts with AI-generated text in the abstract were less likely to be sent out for peer review (confounded with lower quality).

DE @evanko.bsky.social: Disclosure of AI use is not reliable. Fewer than 25% of authors who used AI (as detected by the tool) actually disclosed it. All AI-written Letters to the Editor were rejected without consideration until April 2025.

Discussion (which is cutting into the break time, with very long questions and answers): * We increasingly use AI – is that becoming more usual and acceptable? * How reliable are AI classifiers? Pangram is very good. * Is language evolving? Or not? * Sending notifications to authors.

Authorship and Integrity Issues

After the break, we will continue with the next session, “Authorship and Integrity Issues”, introduced and moderated by Lex Bouter @lexbouter.bsky.social.

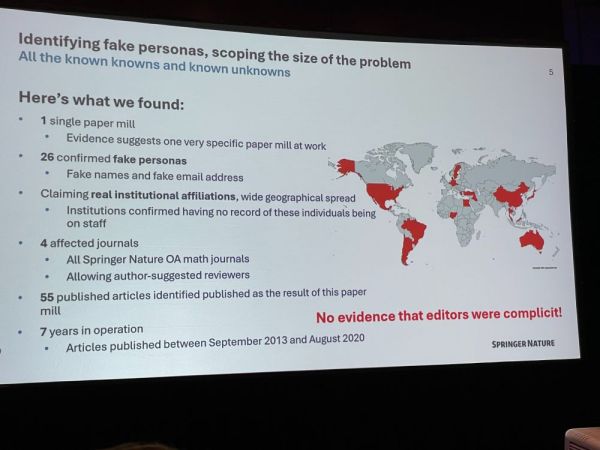

The first speaker (program change) is Tim Kersjes with ‘Paper Mill Use of Fake Personas to Manipulate the Peer Review Process’.

TK: The story started with a reader flagging two nearly identical math papers in two different journals. The math was wrong. Both papers were submitted at the same time. Why was this not detected during peer review? We discovered a paper mill: non-existent authors and fake reviewers.

TK: We identified 55 published articles from this paper mill, with 26 confirmed fake personas claiming real institutional affiliations with a wide geographical spread. Four (open access) journals were affected; the authors suggested fake peer reviewers with non-institutional email addresses.

TK: Authors did not reply, or replied that they did not care whether the paper would be retracted – they just needed it to graduate.

Retaliation: we received lots of spam after retracting papers.

We learned that paper mills can abuse the peer review system. Do not rely on author-suggested reviewers.

EB: I really applaud publishers, in this case @springernature.com, for sharing these stories, so that we all can learn and put up safeguards.

Discussion: It is becoming increasingly difficult to detect paper mills.

Should we do better at checking the identity of authors and reviewers?

Discussion: * Institutional email addresses might not work: not everyone has one, and ECRs move to different institutions. But we do need to get better at verifying identity. * Should we have a list of bad actors? This is tricky (legal issues) – perhaps hold institutions accountable.

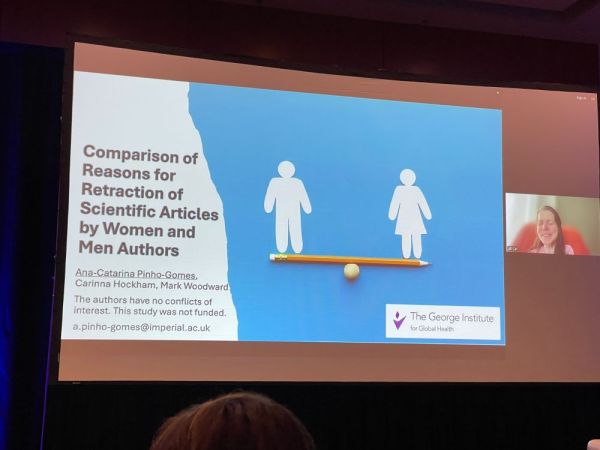

Next: Ana-Catarina Pinho-Gomes with ‘Comparison of Reasons for Retraction of Biomedical Articles by Women and Men Authors’ (virtual presentation): We investigated gender differences in the authorship of retracted papers using the @retractionwatch.com database (65k retracted papers).

ACPG: Women were underrepresented among retractions for misconduct or ethical/legal reasons. Gender equality might enhance research integrity. Limitation: gender was assigned based on names. Discussion: are women more clever, and not caught? 🙂 Paper: journals.plos.org/plosone/arti…

Next: Laura Wilson from @tandfresearch.bsky.social with ‘Authorship Changes as an Indicator of Research Integrity Concerns in Submissions to Academic Journals’: Paper mills sell authorship. Are authorship changes during the editorial process (after submission) an indicator of fraud?

LW: We studied all requests across 1,321 T&F journals to change 3 or more authors after initial submission. * 81% of these authorship change requests were denied. * Such requests were 32x more likely to be investigated by the ethics team for other concerns. * Is this more likely to happen in certain journals?
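To make the screening rule concrete, here is a minimal Python sketch of a flag for post-submission authorship change requests, using the 3-or-more threshold from the talk. It assumes each request comes with the submitted and the requested author lists as plain names, and it counts a ‘change’ as an added or removed author (reordering does not count). The function names and this counting rule are my own hypothetical choices, not Taylor & Francis’s actual workflow.

```python
def count_author_changes(submitted: list[str], requested: list[str]) -> int:
    """Count authors added plus authors removed between two author lists."""
    # Normalize names so trivial case/whitespace differences are not counted as changes
    before = {name.strip().lower() for name in submitted}
    after = {name.strip().lower() for name in requested}
    added = after - before
    removed = before - after
    return len(added) + len(removed)


def flag_authorship_change(submitted: list[str], requested: list[str], threshold: int = 3) -> bool:
    """Flag a request that adds or removes `threshold` or more authors (hypothetical rule)."""
    return count_author_changes(submitted, requested) >= threshold


submitted = ["A. Smith", "B. Jones"]
requested = ["A. Smith", "C. Lee", "D. Kim", "E. Park"]
print(count_author_changes(submitted, requested))  # 4 (three added, one removed)
print(flag_authorship_change(submitted, requested))  # True
```

A flag like this would only be a trigger for a closer look, since (as the discussion below notes) there can be legitimate reasons for authorship changes.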

Discussion: * Reasons for authorship changes? Sometimes additional work was done after peer review. * Why not also include requests involving 1 or 2 authors? * Dropping authors during peer review is also suspicious.

(also, this room is soooo cold)

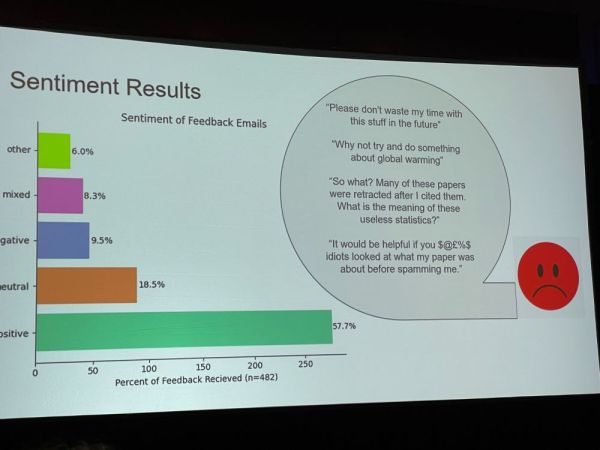

Next: Nicholas DeVito with ‘Notifying Authors That They Have Cited a Retracted Article and Future Citations of Retracted Articles: The RetractoBot Randomized Controlled Trial’. Hypothesis: notifying authors who cited retracted papers will decrease their future citations of those papers.

ND: We notified 250k authors who cited retracted papers (two people in the room got one of those emails). It did not work! After 1 year, we found no significant difference in citations of those retracted papers. But the emails were mostly received positively, although there were some negative replies. www.retracted.net

ND: The email system worked well, and we hope it will at least prevent some retracted papers from being cited in the future. Discussion: * Is Crossmark better than the Retraction Watch database? * Not all citations of retracted works are out of ignorance. * It is better to cite the retraction notice.

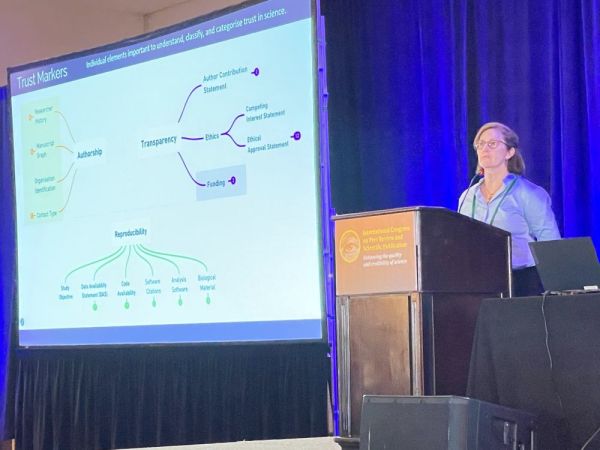

Next: Leslie McIntosh @mcintold.bsky.social with ‘How a Questionable Research Network Manipulated Scholarly Publishing’: Authorship and transparency are both trust markers in science. Emails, institutions, and funders can be used as trust markers.

LM: 232 organizations in 40 countries were affiliated with authors of a suspicious set of papers; 76 institutions were not matched to our database, such as the “Novel Global Community Educational Foundation”; many of these had addresses that were residential homes. arxiv.org/abs/2401.04022

We are now having a lunch break. Back in about 1.5 h!

Diversity and Research Environment

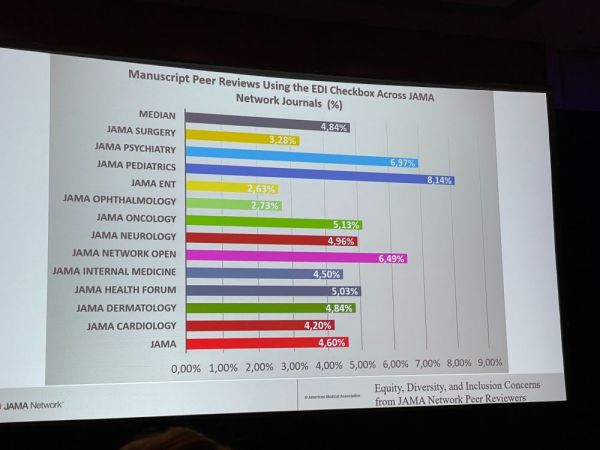

After lunch, we will continue with presentations in the session “Diversity and Research Environment”, starting with Michael Mensah @drmichaelmensah.bsky.social with “An Analysis of Equity, Diversity, and Inclusion Concerns From JAMA Network Peer Reviewers”.

MM: JAMA @jama.com journals now have an EDI checkbox, where peer reviewers can flag manuscripts for concerns about equity, diversity, and inclusion. We analyzed a set of those flagged papers, using JAMA Pediatrics as a case study (it had the most flagged papers). These comments are confidential.

MM: We cannot present specific cases here because the comments are confidential. But in general, some manuscripts used non-standardized questions about race identification, or hypothesized that certain results were due solely to race or gender, without considering other factors.

Discussion: * Should journals rely on reviewers to pick up on these issues? Should this not be the role of editors? Redundancy might be best. * JAMA has guidelines on reporting sex and gender.

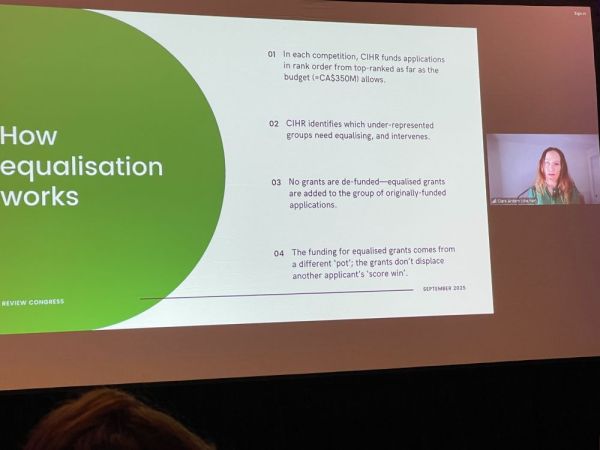

Next, a virtual presentation by Clare Ardern with ‘Assessment of an Intervention to Equalize the Proportion of Funded Grant Applications for Underrepresented Groups at the Canadian Institutes of Health Research’. Equalisation was introduced at CIHR in 2016 and expanded in 2021.

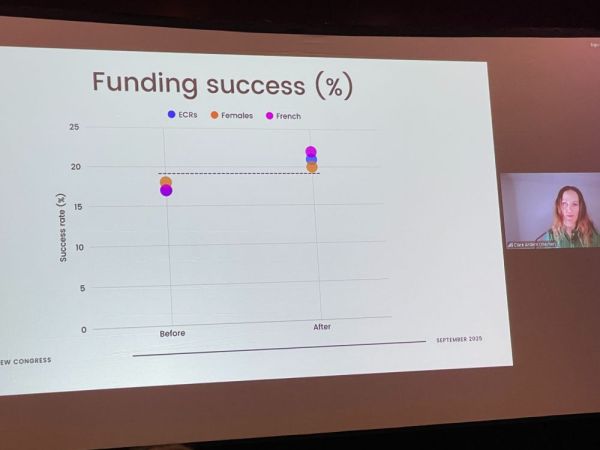

CA: We looked at early career researcher (ECR) and principal investigator applicants in the CIHR grant competition: 60 committees reviewing 2,500 applications twice a year. The funding for equalised grants comes from a different ‘pot’, so it does not displace other winning applicants.

CA: The funding success rates for ECRs, women, and French researchers rose after the intervention. Discussion: * In the US, affirmative action is no longer allowed in certain fields; how is that in Canada? * Does the number of applications match the number of PIs in each group?

Next: Noémie Aubert Bonn with ‘Extracting Research Environment Indicators From the UK Research Excellence Framework 2021 Statements’: The REF assesses research institutions on research outputs, impact, and environment. The weight given to outputs is going down, with more appreciation for the human factor.

NAB: The research environment component considers institutional statements about unit context, people, infrastructure, and collaboration. Qualitative indicators and narratives are important to add context, e.g., statements about sabbatical leave. EDI statements focused mostly on gender. (A QR code was shown, but it is not sharable.)

NAB: Discussions are ongoing to include missing voices (e.g., support staff, people who left academia). The QR code leads to this link, which for me only opens a Microsoft sign-in page: livemanchesterac-my.sharepoint.com/personal/noe… Perhaps @naubertbonn.bsky.social can help?

Discussion: * Self-reported statements by universities can be misleading (‘tear-jerking’). Are these environment statements validated at all? – This is valid criticism; we are looking into better criteria.

Research Misconduct and Integrity

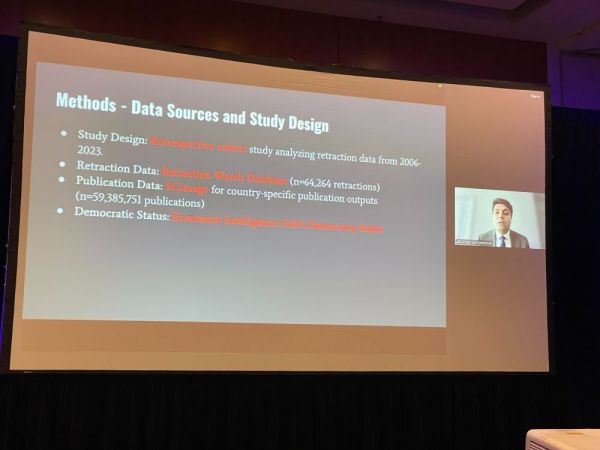

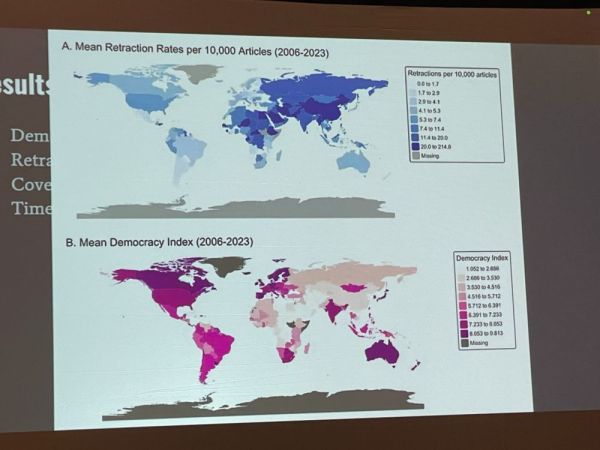

After the tea break, we continue with the “Research Misconduct and Integrity” session (Woohoo!). We start off with ‘Retractions and Democracy Index Scores Across 167 countries’ by Ahmad Sofi-Mahmudi (online presentation): We studied the relationship between democratic status and retractions, a sensitive topic.

ASM: Higher democracy scores were associated with lower retraction rates (per 10,000 publications). GDP per capita was positively associated with retraction rates, suggesting better detection mechanisms in wealthier, more effectively governed countries.

ASM: Link to report: prct.xeradb.com/democracy-an… Discussion: * What led you to do this study? Wondering what drives a cheating culture: the system’s impact on individuals. * Many authoritarian regimes have excellence programs that reward high output, thus increasing the chance of misconduct.

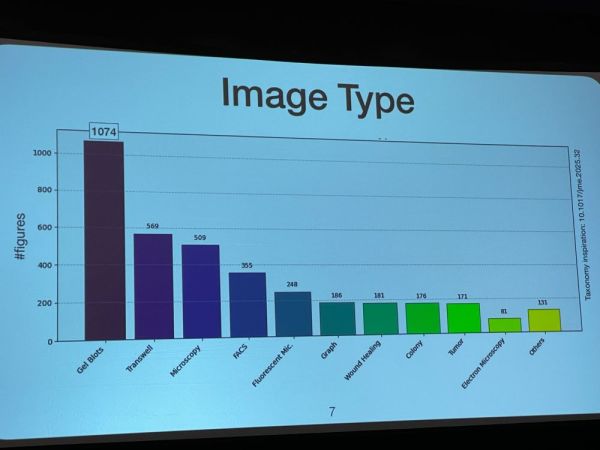

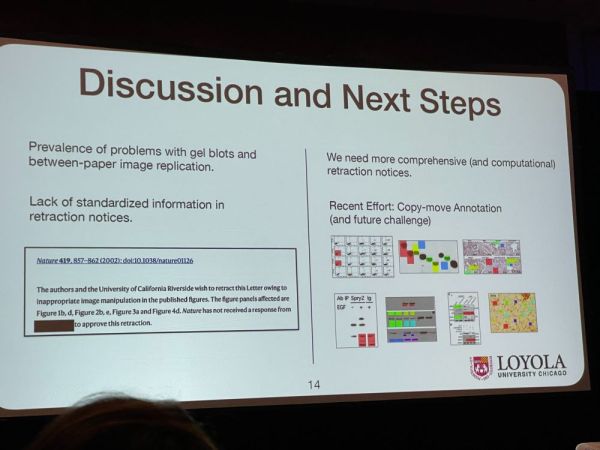

Next: Daniel Moreira with ‘Characterizing Problematic Images in Retracted Scientific Articles’: We focus on developing software tools. We started with @retractionwatch.com data (56k entries), 8k of which involved images, and linked them to @pubpeer.com discussions, yielding 2,078 articles.

DM: Most of the retractions involved problematic Western blots. Using a taxonomy based on Paul Brookes’ work, we found that most manipulations were replications (reuse of images). Problems can occur within figures, between figures, or between documents, or stem from paper mills (large scale, unrelated authors).

DM: Most problems fall into the between-paper replication group. We found a lack of standardized information in retraction notices. We are now developing software for copy-move annotation and challenges. See: www.nature.com/articles/s41…

EB: Here is Paul Brookes’ paper about detecting image problems and taxonomy: pmc.ncbi.nlm.nih.gov/articles/PMC…

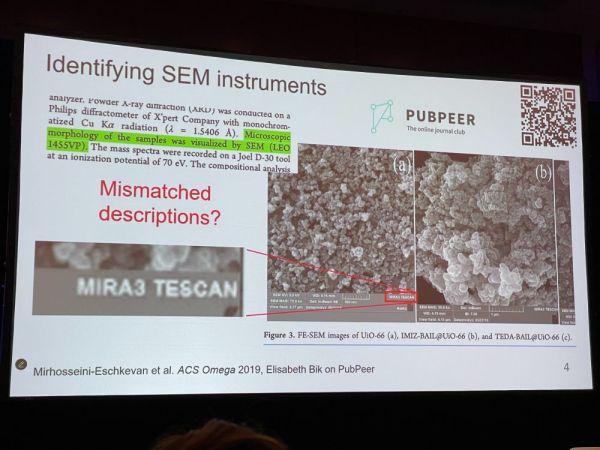

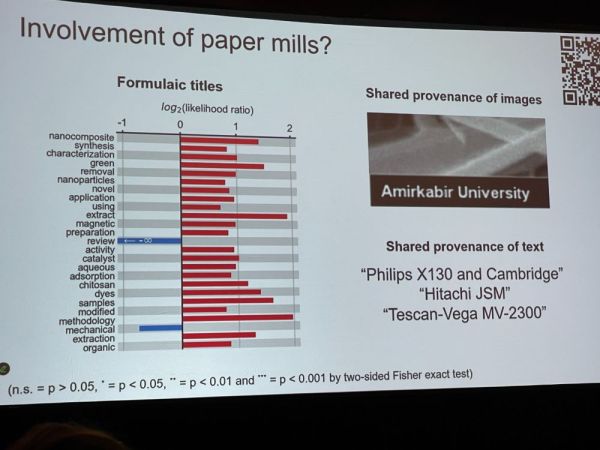

Next: Reese Richardson with ‘Misidentification of Scanning Electron Microscope Instruments in the Peer-Reviewed Materials Science and Engineering Literature’. Paper: journals.plos.org/plosone/arti… We looked at SEM images, which usually have a metadata banner with crucial data.

RR: Some @PubPeer comments led us to analyze mismatches between the instrument make given in the Methods section and the instrument make shown in the metadata banner. In a set of 11k articles, our automated screen found 2,400 articles with a misidentified SEM. Only 13 of them had been flagged on PubPeer.
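The mismatch screen can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the banner text is assumed to be already extracted from the image (e.g., by OCR), the manufacturer list is hypothetical and incomplete, and an article is flagged only when both sources name a make and the two sets do not overlap. It is not the authors’ actual pipeline.

```python
import re

# Hypothetical, incomplete list of SEM manufacturers, for illustration only
MANUFACTURERS = ["Zeiss", "JEOL", "Hitachi", "FEI", "Tescan", "Thermo Fisher", "Phenom"]


def find_makes(text: str) -> set[str]:
    """Return the manufacturer names mentioned in `text` (whole-word, case-insensitive)."""
    found = set()
    for make in MANUFACTURERS:
        if re.search(rf"\b{re.escape(make)}\b", text, flags=re.IGNORECASE):
            found.add(make)
    return found


def is_mismatch(methods_text: str, banner_text: str) -> bool:
    """Flag an article when both sources name a make but the makes do not overlap."""
    methods_makes = find_makes(methods_text)
    banner_makes = find_makes(banner_text)
    return bool(methods_makes) and bool(banner_makes) and methods_makes.isdisjoint(banner_makes)


methods = "Morphology was examined with a JEOL JSM-7600F scanning electron microscope."
banner = "SEM HV: 10.0 kV  WD: 9.87 mm  TESCAN VEGA3"
print(is_mismatch(methods, banner))  # True
```

A real screen would also need to handle rebrandings and acquisitions (for example, FEI is now part of Thermo Fisher), which this sketch ignores and would report as false positives.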

RR @reeserichardson.bsky.social: Authors from Iran and Iraq were over-represented in this set, and certain authors did this repeatedly. Several articles had formulaic titles without common authorship, suggestive of paper mills; the journals involved were frequently featured in paper mill advertisements.

Discussion: * How many papers do not have the banner? (Not many, at least not in high resolution.) Publishers might require the banner, in high resolution! * Could a smart fraudster not cut off the banner? Absolutely! * Are there other types of data where we can apply the same analysis?

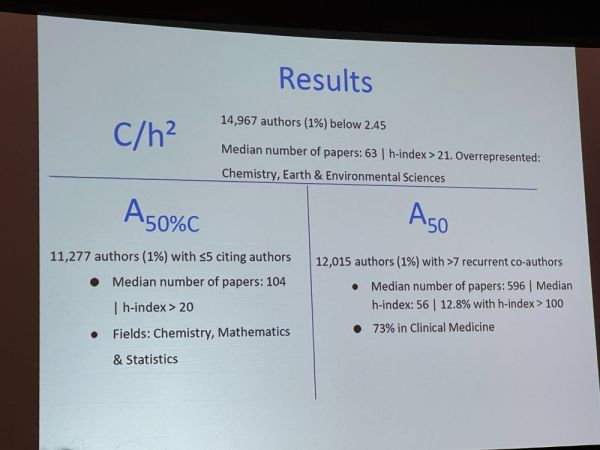

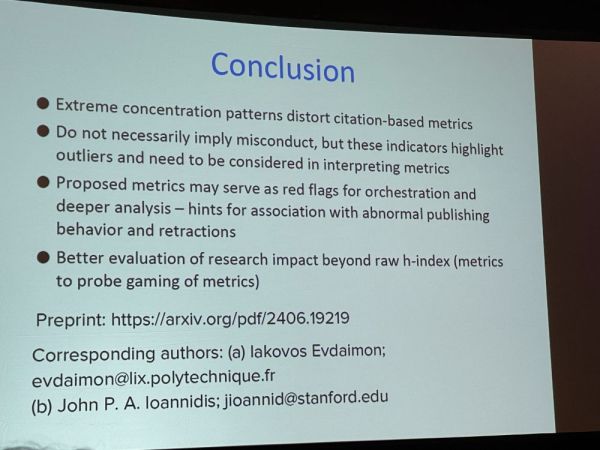

Next: John Ioannidis with ‘Indicators of Small-Scale and Large-Scale Citation Concentration Patterns’: The h-index is a popular citation metric, but it can easily be gamed. Can we identify citation concentration? We looked at 1.5M authors from Scopus and focused on the extreme 1% for each of 3 indicators.

JI: Outliers in: (1) citations/h², (2) A50%C, the number of citing authors who cumulatively account for 50% of citations, and (3) A50, the number of co-authors with shared co-authorship exceeding 50 papers. Physics and Astronomy papers were excluded. Preprint: arxiv.org/abs/2406.19219 Paper: journals.plos.org/plosone/arti…
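For readers who want to see how these indicators could be computed, here is a simplified Python sketch based on my reading of the definitions above; the exact definitions in the paper may differ. It assumes per-paper citation counts for one author, a mapping from each citing author to the number of citations they contributed (each citation attributed to a single citing author, which is a simplification), and a mapping from each co-author to the number of shared papers. All function names are hypothetical.

```python
def h_index(citations: list[int]) -> int:
    """Largest h such that h papers have at least h citations each."""
    h = 0
    for rank, count in enumerate(sorted(citations, reverse=True), start=1):
        if count < rank:
            break
        h = rank
    return h


def citations_per_h2(citations: list[int]) -> float:
    """Indicator 1: total citations divided by h squared (0.0 if nothing is cited)."""
    h = h_index(citations)
    return sum(citations) / h**2 if h else 0.0


def a50_citing(citations_by_citing_author: dict[str, int]) -> int:
    """Indicator 2 (A50%C): fewest citing authors who together supply >= 50% of citations."""
    counts = sorted(citations_by_citing_author.values(), reverse=True)
    total = sum(counts)
    if total == 0:
        return 0  # no citations at all
    running = 0
    for n, count in enumerate(counts, start=1):
        running += count
        if 2 * running >= total:
            return n
    return len(counts)  # not reached when total > 0


def a50_coauthors(shared_papers: dict[str, int], threshold: int = 50) -> int:
    """Indicator 3 (A50): number of co-authors with more than `threshold` shared papers."""
    return sum(1 for n in shared_papers.values() if n > threshold)


papers = [50, 30, 12, 8, 5, 3, 1]
print(h_index(papers))  # 5
print(citations_per_h2(papers))  # 4.36 (109 citations / 5**2)
print(a50_citing({"X": 40, "Y": 25, "Z": 20, "W": 15}))  # 2
print(a50_coauthors({"P": 72, "Q": 51, "R": 50, "S": 3}))  # 2
```

A high citations/h² or a small A50%C points to citations concentrated in few papers or few citing authors; the talk’s caveat applies that such outliers are not necessarily misconduct.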

JI: Retractions were overrepresented, in particular in group 1. These extreme concentration patterns distort citation-based metrics. Not necessarily misconduct, but outliers that need to be considered in interpreting metrics. We need better metrics to probe gaming of metrics.

Discussion: * Do your results indicate that the problem is not so bad? Or was the threshold too stringent? * Should we intervene? * Do these indicators predict retractions? Yes, indicator 1 can. * Differences between fields? Yes, each of the 3 indicators has its own set of authors.

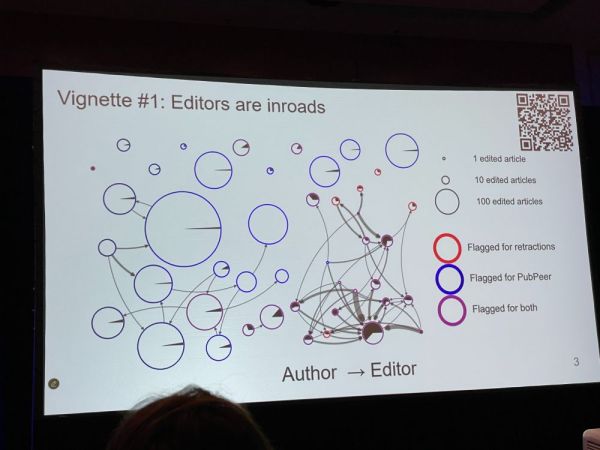

Next up: Reese Richardson @reeserichardson.bsky.social (again!) with ‘Scale and Resilience in Organizations Enabling Systematic Scientific Fraud’ – in which we tried to evaluate how large the paper mill problem is. Our dense paper: www.pnas.org/doi/abs/10.1…

RR: #1: Editors are inroads: some editors are much more strongly associated with PubPeer-flagged or retracted papers. #2: Through shared images, we found 2,000 articles that are connected to many others, suggestive of paper mills.
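Finding groups of articles linked by shared images is essentially a connected-components problem. A minimal Python sketch, assuming that duplicate-image detection has already produced pairs of article IDs that share an image (the IDs below are made up), uses union-find to group them. It illustrates the general idea only and is not the authors’ method; large components would be the candidates for closer inspection.

```python
from collections import defaultdict


def find(parent: dict[str, str], node: str) -> str:
    """Return the representative of `node`'s group, with path compression."""
    parent.setdefault(node, node)
    root = node
    while parent[root] != root:
        root = parent[root]
    # Point every node on the path directly at the root
    while parent[node] != root:
        next_node = parent[node]
        parent[node] = root
        node = next_node
    return root


def image_sharing_clusters(shared_pairs: list[tuple[str, str]]) -> list[list[str]]:
    """Group articles that are linked, directly or indirectly, by a shared image."""
    parent: dict[str, str] = {}
    for a, b in shared_pairs:
        root_a, root_b = find(parent, a), find(parent, b)
        if root_a != root_b:
            parent[root_a] = root_b  # merge the two groups
    groups: defaultdict[str, set[str]] = defaultdict(set)
    for node in list(parent):
        groups[find(parent, node)].add(node)
    # Largest clusters first: these are the ones worth a closer look
    return sorted((sorted(group) for group in groups.values()), key=len, reverse=True)


pairs = [("A1", "A2"), ("A2", "A3"), ("A3", "A4"), ("B1", "B2")]
print(image_sharing_clusters(pairs))  # [['A1', 'A2', 'A3', 'A4'], ['B1', 'B2']]
```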

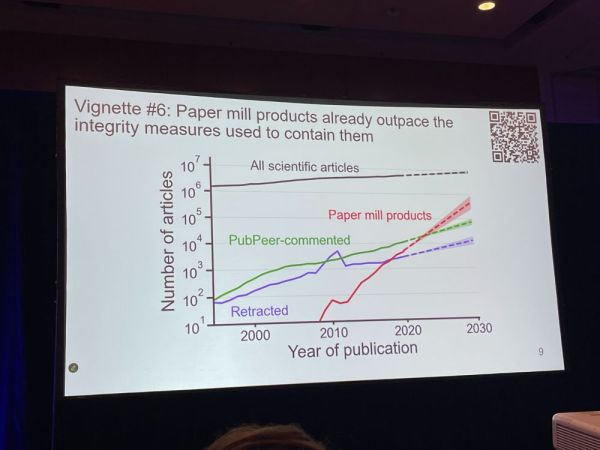

RR: #3: Using the Internet Archive, we looked into a publication ‘broker’ that evades and adapts to integrity measures. It replaces one journal with another when it gets caught. Integrity measures (e.g., deindexing a journal) are applied very infrequently. #4 skipped.

RR: #6: paper mill products already outpace the integrity measures used to contain them. Widespread defection. Systemic fraud is growing and a viable object of study for metascience. We need more publication metadata from publishers. Also: plug for @COSIG – cosig.net

Discussion: * What type of data from publishers should be available for this type of research? We need to couple such tools with metadata availability! [Applause] * Retraction notices are not reliable for identifying paper mill products. * It took overcoming a lot of resistance and pushback to get this published.

* Should we worry about mass retractions, which mostly involve papers that might not be important? Is the number of single-paper retractions going down? Should we worry more about that? – Perhaps those paper mills have a wider harmful impact by teaching people that misconduct is OK.

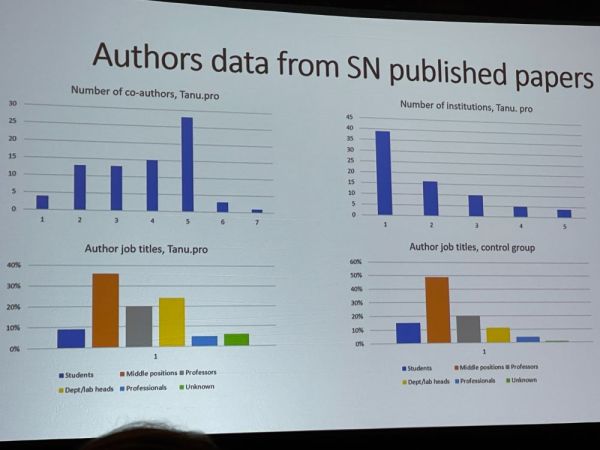

Next: ‘Patterns of Paper Mill Papers and Retraction Challenges’ by Anna Abalkina @abalkina.bsky.social: We identified over 60 suspicious email domains associated with Tanu.pro, and identified 1,517 papers in 380 Scopus journals. Authors came from many countries, with the core in Ukraine, Kazakhstan, and Russia.

AA: These papers are still being published, despite warnings to several publishers. Some contain translated plagiarism (e.g., of bachelor theses). Some were published through non-existent peer reviewers, e.g., “Leon Holmes”.

AA: Data from @springernature.com: 8,432 submitted papers were identified as coming from Tanu.pro. Most were immediately rejected, but at least 79 were published; 48 are now retracted. Authors of 13 papers sent the same verbatim reply. Most authors were mid-career.

AA: There are no COPE guidelines about how to handle paper mill products like these. More papers and resources about Tanu here: papermills.tilda.ws/paperstanupro Discussion: What is the extent of the problem? Hard to know. We recognize some paper mills but not all!

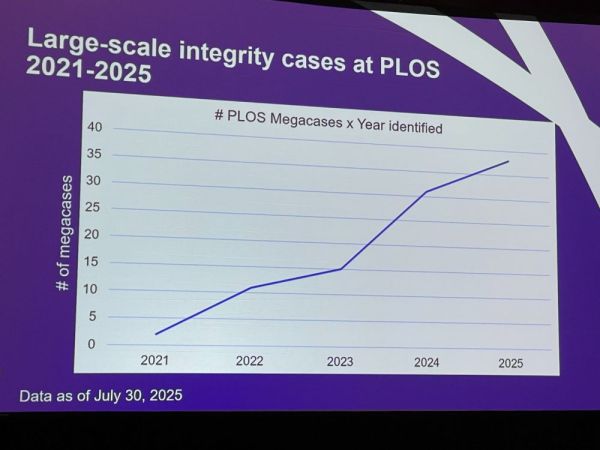

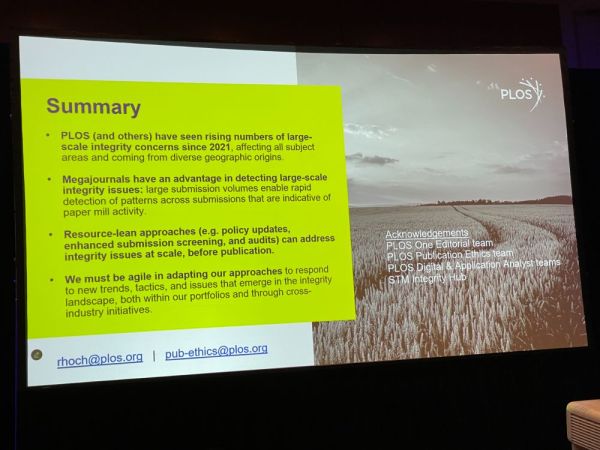

Next: Renee Hoch from @plos.org with ‘Sustainable Approaches to Upholding High Integrity Standards in the Face of Large-Scale Threats: Insights From PLOS One’: @plosone.org has adaptable, system-wide screening for integrity issues at scale.

RH: The number of integrity cases has increased over the past years, with the highest representation from India, Pakistan, and China, but also multinational cases. We screen, e.g., for duplicate submissions, human research ethics approval, and paper mill signals.

RH: These integrity interventions have resulted in an increased desk rejection rate: preventing misconduct from getting published is less work than retracting it later. We must be agile in adapting our approaches to new trends, but megajournals have an advantage in detecting those.

This ends today’s scientific session. On to the Opening Reception!

Click here to go to Peer Review Congress Chicago – [Day 2] and [Day 3].