There has been a lot of excitement, and even a presidential tweet, about a recent paper from the lab of Didier Raoult, an infectious disease specialist in Marseille, France. But although this study might offer a glimmer of hope, there are some serious problems with the paper too.

The paper, by Gautret et al., first appeared on 16 March 2020 as a preprint on medRxiv. A “preprint” is a raw version of a scientific paper that has not yet been reviewed by external scientists. Because the COVID-19 pandemic is so severe and treatment options are so limited, it makes sense that the authors chose to publish this small study as soon as possible. Peer review usually takes weeks or months, so it was good that they shared it as soon as the first results came in.

On the same day as the preprint appeared, 16 March, the manuscript was submitted to the International Journal of Antimicrobial Agents, where it was accepted within a day, on 17 March, and published online on 20 March. That suggests that peer review was done in 24h, an incredibly fast time.

A summary of the study

In the study, a total of 42 people with COVID-19 who had been admitted to hospitals in Marseille, Nice, Avignon and Briançon (all in the south of France) were enrolled. Of these, 16 patients received the normal care (“controls”), while 26 other patients were treated with Hydroxychloroquine (HQ), a drug normally used to treat lupus and rheumatoid arthritis, which is related to Chloroquine, a drug used to treat or prevent malaria infections.

Of the 26 HQ treated patients, 20 completed the study. Of these, 6 also received Azithromycin (AZ), an antibiotic. During the 6-day study, patients were sampled for the presence or absence of the COVID-19 virus (PCR positivity). On day 6, most of the 16 control patients, about half of the 14 patients treated with HQ only, and none of the 6 HQ+AZ treated patients were PCR positive. The conclusion was that HQ treatment, and especially HQ+AZ treatment, is very effective in treating COVID-19 infections.

This study gained an enormous amount of attention because it reports very encouraging results from a small study in which COVID-19 patients were treated with hydroxychloroquine. This is great news in the midst of a pandemic of a viral disease for which there is currently no good treatment, which is why this paper was quickly published.

Unfortunately, there are many potential problems with the way the data and the peer review process were handled. The discussion is still ongoing on PubPeer, with two threads: one on the preprint (40 comments as of today) and one on the published version (3 comments). Let’s take a closer look at the paper and discuss some possible problems. Note that part of the text in this blog post has been previously posted by me on PubPeer and on Twitter.

Ethical issues

In this study, people who had COVID-19, a coronavirus infection, were treated with a drug designed to kill the malaria parasite. Studies like these, where people are being treated with a drug that is approved to treat a different disease, need to be approved by ethical and drug safety committees before they take place.

The protocol for the treatment was approved by the French National Agency for Drug Safety on March 5th 2020. It was approved by the French Ethic Committee on March 6th 2020. The paper states that patients were followed up until day 14. The paper was submitted for publication on March 16th. See screenshot.

But how does a 14-day study fit between March 6th and March 16th? Could the authors have started the study before ethical approval was obtained? Something does not seem quite right.

Strangely, although the text states that the patients were followed up for 14 days, the figures and tables only show data for 6 days, so maybe the timeline is actually OK. But this is not clearly described in the Methods.
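The timeline question comes down to simple date arithmetic. Here is a minimal sketch in Python that uses only the two dates mentioned above (ethics approval on 6 March 2020, submission on 16 March 2020). The names `days_available` and `fits_in_window` are my own, and the check generously assumes that the first patient was enrolled on the very day of approval.

```python
from datetime import date

# Dates as stated in the paper.
ETHICS_APPROVAL = date(2020, 3, 6)
SUBMISSION = date(2020, 3, 16)

# Maximum number of days of follow-up that could have elapsed between
# approval and submission (assumption: enrollment started on approval day).
days_available = (SUBMISSION - ETHICS_APPROVAL).days


def fits_in_window(follow_up_days: int) -> bool:
    """Return True if a follow-up of this length fits before submission."""
    return follow_up_days <= days_available


print(f"Days between approval and submission: {days_available}")
print(f"14-day follow-up fits: {fits_in_window(14)}")
print(f"6-day follow-up fits: {fits_in_window(6)}")
```

Under that assumption there are 10 days between approval and submission, so a 6-day follow-up fits, but a 14-day follow-up does not.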

A change of plans

In the EU Clinical Trials Register page, the study was described as evaluating PCR data on Day 1, Day 4, Day 7 and Day 14. However, the study shows the data for Day 6, which is different from what was planned. Why did the authors not show the results on Day 7? Did the data on Day 7 not look as good?

Confounding factors

In an ideal clinical trial study, the control group and treatment group should be as similar as possible. Patients should be of similar age and gender ratio, be equally sick at the start of treatment, and analyzed in the same way. The only difference between the 2 groups should be whether the patients received treatment or not.

Here, however, the control and treatment groups differ from each other in various other ways. Scientists call these confounding factors: factors other than the treatment that differ between the controls and the treated patients, and that might explain the different outcomes.

The patients were recruited at different “centers”, but it is not very clear which patient was located in which hospital. The HQ treated patients were all in Marseille, while the controls were located in Marseille or other centers. One can imagine that hospitals might differ in treatment plans, ward layouts, availability of staff, disinfection routines, etc. It is not clear whether controls and treated patients were all recruited and treated at the same hospital. This should be added to Table S1.

The control and treated patients were also not randomized, as is usual in a trial like this. Some patients were chosen to be treated, while other patients were chosen to serve as controls. That might bring along all kinds of biases; for example, researchers might be tempted to assign sicker patients, or patients who have been sick longer, to one particular group. Unfortunately, the lead author of the paper, Didier Raoult, does not believe in randomized clinical trials.

Then, the patients in the control group included some very young patients (ages 10-20), while the two treatment groups only had patients 25 years and older. See the lime green box in Table S1 above. This is strange, because the paper also states that patients could not be included if they were 12 years or younger. Control patients 1-3 do not fulfill that criterion.

Of particular note, control patients 6 and 8-16 appear to have been analyzed differently. Their Day 0 PCR values are not given as CT values (the number of cycles after which a PCR becomes positive; the lower the number, the more virus is present) but as POS/NEG, suggesting a different test was used. These are highlighted with red boxes in the Table S1 screenshot above.

Update: Another possible confounding factor is that the patients who did not want to receive the HQ treatment or who “had an exclusion criteria”, such as a medical condition that would put them at risk for treatment with the drug, were assigned to the control group. This might mean that there were even more differences between the treatment and control arms of the study.

Six missing patients

Although the study started with 26 patients in the HQ or HQ+AZ group, data from only 20 treated patients are given, because not all patients completed the 6-day study. The data for these 20 patients looks incredibly nice; especially the patients who were given both medications all recovered very fast.

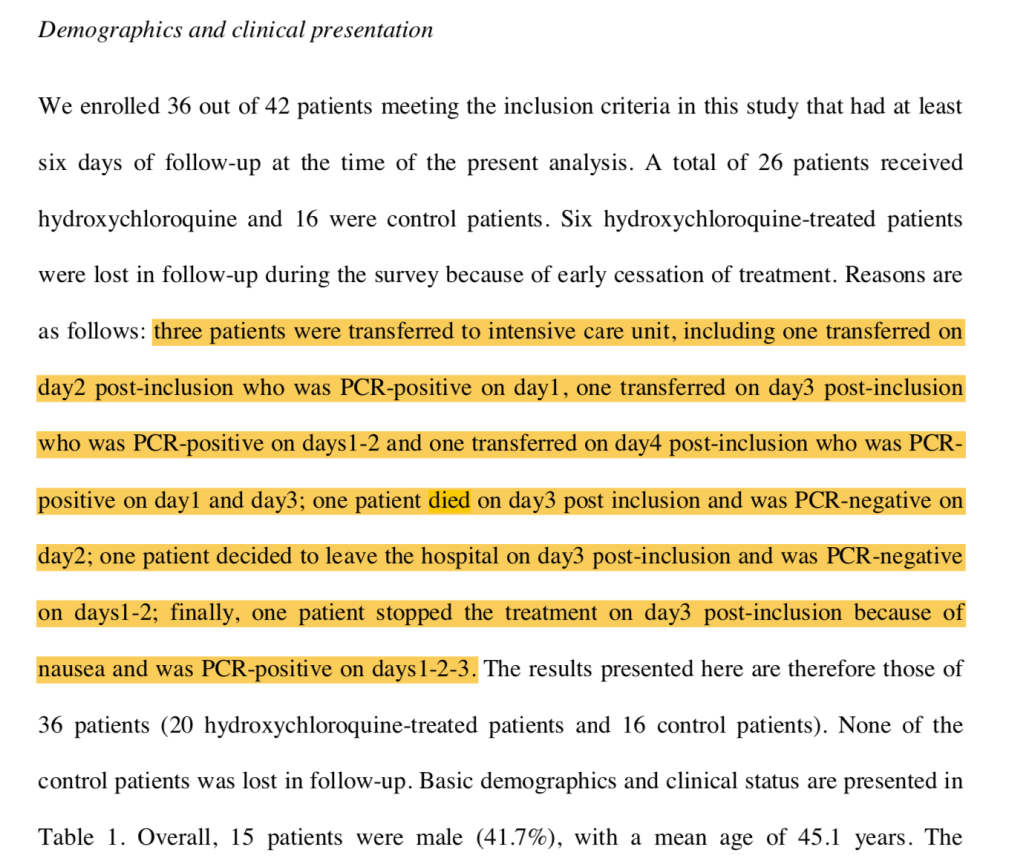

What happened to the other six treated patients? Why did they drop out of the study? Three of them were transferred to the intensive care unit (presumably because they got sicker) and one died. Of the other two, one stopped the medication because of nausea and one left the hospital (which might be a sign they felt much better). See this screenshot:

So 4 of the 26 treated patients were actually not recovering at all. It seems a bit strange to leave these 4 patients who got worse or who died out of the study, just on the basis that they stopped taking the medication (which is pretty difficult once the patient is dead). As several people wrote sarcastically on Twitter: My results always look amazing if I leave out the patients who died, or the experiments that did not work.
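To see how much the choice of denominator matters, here is a small Python sketch. The enrollment and dropout counts come from the paper as described above. The number of treated patients who were PCR negative on Day 6 (`ASSUMED_CLEARED`) is my own placeholder, chosen to match "all 6 HQ+AZ patients plus about half of the 14 HQ-only patients"; it is an assumption for illustration, not a figure taken from Table S1.

```python
# Counts stated in the paper.
ENROLLED_TREATED = 26
COMPLETED_TREATED = 20
DROPOUTS = {"icu": 3, "died": 1, "stopped_nausea": 1, "left_hospital": 1}

# ASSUMPTION (illustrative only): 6 HQ+AZ patients plus roughly half
# (taken here as 8) of the 14 HQ-only patients were PCR negative on Day 6.
ASSUMED_CLEARED = 6 + 8

# Analysis restricted to patients who completed the study.
completers_only = ASSUMED_CLEARED / COMPLETED_TREATED

# Worst-case analysis: every enrolled patient stays in the denominator,
# and all dropouts are counted as not cleared.
all_enrolled_worst_case = ASSUMED_CLEARED / ENROLLED_TREATED

# Share of enrolled treated patients with a clearly bad outcome.
bad_outcomes = DROPOUTS["icu"] + DROPOUTS["died"]
bad_outcome_rate = bad_outcomes / ENROLLED_TREATED

print(f"Cleared, completers only:      {completers_only:.1%}")
print(f"Cleared, all enrolled (worst): {all_enrolled_worst_case:.1%}")
print(f"ICU or death among enrolled:   {bad_outcome_rate:.1%}")
```

With these placeholder numbers, the apparent success rate drops once the six missing patients are put back into the denominator. Counting every dropout as a failure is the most pessimistic reading; other ways of handling the missing patients are possible.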

Problematic PCRs and outcomes

As you can see in the Table S1 screenshot above, patients’ PCR results were a bit variable. Patients 5, 23, and 31 in particular show days on which their PCR is negative, followed by days on which their PCR is positive again (dark blue boxes). This might be because the PCRs were done using a throat swab, and the virus might be just around the detection limit in the throat, leading to variable results from day to day.

Several patients in the control group did not even have a PCR result on Day 6, so it is not clear how they were counted in the Day 6 result. E.g. look at patients 11 and 12, who were positive on Day 4, but not tested on Day 5 or 6. How sure are the authors that these patients did not convert to PCR negativity?

It would have been better if the authors had used clinical improvement (e.g. fever, lung function) as the outcome, not a throat PCR. The virus could still be rampantly present in the lungs, and the patient could still be very sick, while the virus is already cleared out of the throat. If PCR is used as an outcome, it would be better defined as, for example, at least two or three consecutive days of PCR negativity.
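A stricter PCR outcome of this kind is easy to define. The sketch below, in Python, looks for the first run of consecutive negative results in a patient's daily series. The function name, the encoding (True for positive, False for negative, None for not tested) and the example series are all my own hypothetical choices and are not taken from Table S1.

```python
from typing import Optional, Sequence


def first_sustained_negative(
    results: Sequence[Optional[bool]], run_length: int = 2
) -> Optional[int]:
    """Return the index of the first day that starts `run_length`
    consecutive negative results, or None if there is no such run.

    results[i] is True (PCR positive), False (PCR negative)
    or None (not tested on that day).
    """
    run = 0
    for day, positive in enumerate(results):
        if positive is False:
            run += 1
            if run == run_length:
                return day - run_length + 1
        else:
            # A positive result or a missing test breaks the run.
            run = 0
    return None


# Hypothetical patient: negative on day 2, positive again on day 3,
# then consistently negative from day 4 onward.
flip_flop = [True, True, False, True, False, False, False]

print(first_sustained_negative(flip_flop, run_length=1))  # single negative
print(first_sustained_negative(flip_flop, run_length=2))  # sustained negative
```

With a single negative test as the criterion, this hypothetical patient would count as cleared on day 2; requiring two consecutive negatives moves that to day 4. Treating an untested day as breaking the run is a conservative choice on my part.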

Troubled peer review process

The paper was submitted on 16 March and accepted, presumably after peer review, on 17 March. Assuming the paper was indeed peer reviewed within 24h, that seems incredibly fast. But there might be a reason why this process was so fast.

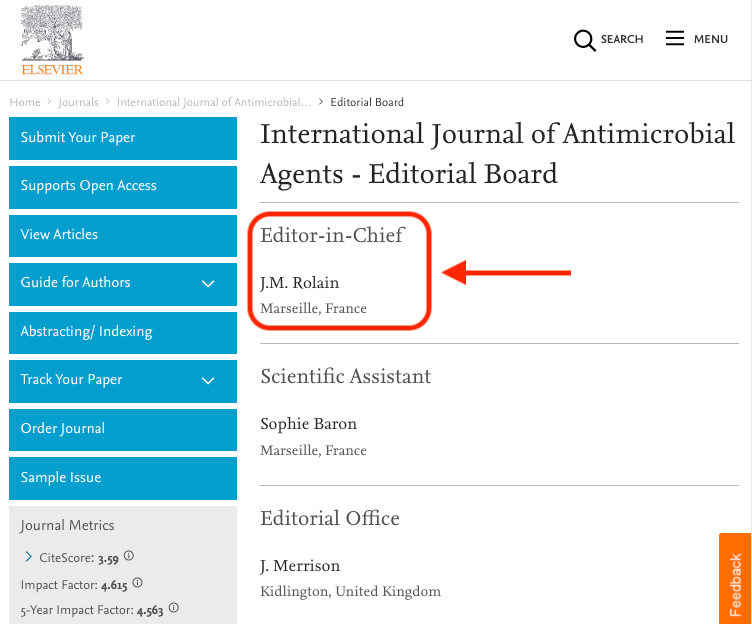

One of the authors on this paper, JM Rolain, is also the Editor in Chief of the journal in which the paper was accepted, i.e. the International Journal of Antimicrobial Agents. This might be perceived as a huge conflict of interest, in particular in combination with the peer review process of less than 24h.

This would be the equivalent of allowing a student to grade their own paper. Lo and behold, the student got an A+!

The journal should make the peer reviews (usually reports from 2 or 3 other scientists who are not authors on the paper and do not work at the same institutes) publicly available, to prove that a thorough peer-review process indeed took place.

Obviously, in the case of a viral pandemic there is a great need for new results to become available as fast as possible. It is understandable that the scientific peer review process might be a bit less polished in this case than for other science papers.

However, the preprint was already publicly available for all, so it does not make sense that this peer review was done in such a rushed manner. It would have been better if the raw data were shared by preprint, with a more carefully written and analyzed, peer-reviewed version being published a couple of weeks later.

Other posts about this paper

Here are some Twitter and blog posts written by others that have raised similar or additional concerns about the Gautret et al. paper. I will update this list, so leave comments below if you have seen a good one.

- Oliver Hulme et al. did a reanalysis of the data, adding the 4 dropped-out HQ patients back in, and found that PCR detection in the HQ treatment group is still significantly lower. See their preprint here: https://osf.io/96bce/

- Gaetan Burgio: https://twitter.com/GaetanBurgio/status/1241201751916568576

- Leonid Schneider: Chloroquine genius Didier Raoult to save the world from COVID-19

- Krutika Kuppalli: https://twitter.com/KrutikaKuppalli/status/1241457057976315904

- Nick Brown: https://twitter.com/sTeamTraen/status/1241330562830422017

- Andrew Lover: https://twitter.com/AndrewALover/status/1241735899546890242

- Podcast with C Drosten (in German): https://www.ndr.de/nachrichten/info/17-Coronavirus-Update-Malaria-Medikament-vorerst-kein-Hoffnungstraeger,podcastcoronavirus144.htm

- Matthew Herper for STAT news: https://www.statnews.com/2020/03/22/why-trump-at-odds-with-medical-experts-over-malaria-drugs-against-covid-19/

Any notion of what Raoult’s argument is about randomized trials? I tried watching the video but the most I could grok is he thinks they’re unethical somehow, but I don’t follow how he is proposing to then do science.

He is not doing science. He is doing medicine to the best of his knowledge. That is his argument against clinical trials in this specific situation of COVID-19. Other than that, he is not generally against trials and he follows the rules. The emergency of the current situation made him feel like it was not the time for pondering but rather for acting. According to his interviews, he applies war medicine to relieve the health care centers, as any good doctor would do. I’m not saying he is right about the HQ, but he is certainly right in trying to save lives with molecules he and thousands of physicians know, instead of wasting time “doing science”.

Not war medicine, but what they call tropical medicine, which is in fact colonial medicine in its French form

Hello,

This analysis is well done, as it’s a very poor paper with plenty of conflicts of interest. The French context goes beyond the article. Too many non-scientists, mainly politicians, give opinions on radio and television. A well-known politician from Nice (Estrosi) took chloroquine for his coronavirus. He was cured in a few days without hospitalization. He gave interviews to explain that chloroquine was effective… he is not the only politician with a media opinion on this treatment. Politicians and pseudo-science journalists comment on D Raoult’s excellence, based only on the number of publications. In March 2020, D Raoult co-signed 5 papers in the International Journal of Antimicrobial Agents…

There are too many fights in France about this publication, and the message that it is bad is not heard by the media.

I suggest reading the 2012 D Raoult portrait in Science entitled ‘Sound and fury in the microbiology lab’ https://science.sciencemag.org/content/335/6072/1033.long

Have a nice day

Hervé Maisonneuve, MD, Paris

A rigorous review

https://www.nature.com/articles/s41584-020-0372-x

Review Article

Published: 07 February 2020

Mechanisms of action of hydroxychloroquine and chloroquine: implications for rheumatology

Eva Schrezenmeier & Thomas Dörner

Nature Reviews Rheumatology volume 16, pages 155–166 (2020)

Abstract of the paper

Despite widespread clinical use of antimalarial drugs such as hydroxychloroquine and chloroquine in the treatment of rheumatoid arthritis (RA), systemic lupus erythematosus (SLE) and other inflammatory rheumatic diseases, insights into the mechanism of action of these drugs are still emerging. Hydroxychloroquine and chloroquine are weak bases and have a characteristic ‘deep’ volume of distribution and a half-life of around 50 days. These drugs interfere with lysosomal activity and autophagy, interact with membrane stability and alter signalling pathways and transcriptional activity, which can result in inhibition of cytokine production and modulation of certain co-stimulatory molecules. These modes of action, together with the drug’s chemical properties, might explain the clinical efficacy and well-known adverse effects (such as retinopathy) of these drugs. The unknown dose–response relationships of these drugs and the lack of definitions of the minimum dose needed for clinical efficacy and what doses are toxic pose challenges to clinical practice. Further challenges include patient non-adherence and possible context-dependent variations in blood drug levels. Available mechanistic data give insights into the immunomodulatory potency of hydroxychloroquine and provide the rationale to search for more potent and/or selective inhibitors.

“The antimalarial drugs hydroxychloroquine and chloroquine are DMARDs introduced serendipitously and empirically for the treatment of various rheumatic diseases (Fig. 1)” These lines are from the Nature review paper above

The above Nature paper is in no way related to COVID-19. However, did the word “serendipitously” used in that paper prompt the French study? The French paper in IJAA contains no reference to the above Nature review, which was published online on 7 February 2020, a month before the trials for COVID-19 were conducted.

“26 other patients were treated with Hydroxychloroquine (HQ), a drug that is normally used to treat malaria infections”

P. falciparum has been resistant to chloroquinine since the ’80s. Artemisinin-based cocktails have filled the void for now.

^ Um, “chloroquine,” sorry. Mutatis mutandis hydroxychloroquine.

TWENTY got better! ONE died.

I’ll take those odds and so would anyone else that’s staring into the reality of dying. It’s inexpensive to manufacture. There is ample supply. The risks are well known and quite manageable. There is zero “science” to show that this therapy has been the cause of a single death. Like it or not, physicians everywhere are using it BECAUSE IT WORKS! Why would any of you stand in the way of saving lives waiting for empirical scientific proof, knowing it could be years, decades, maybe NEVER to satisfy your scientific minds.

As for the science and scientists… “Knock yourselves out but, whatever you do–please–do not put your vanity ahead of saving lives. While you guys fight to be published and receive the accolades of your peers, and funding for your work–people die.”

Everyone will fare much better if you’ll just apply a little wisdom… KISS!

yes,u r right.

jknana.com

#supportElisabethBik I am with you. Your work is hard but is essential for improving practices in science. Don’t let them intimidate you

I am supporting Elisabeth Bik. This complaint demonstrates only one thing: the pettiness of the litigators, who don’t want to be scrutinized and challenged.

… conflict of interest? ..because there’s so much money to be made in contradicting Pharma marketing? Since hydroxychloroquine works best when administered early, doesn’t it make sense that some patients may have deteriorated because they didn’t get treatment soon enough?

Dear Ms Bik,

In your piece I mostly find a lot of nitpicking.

To take one example (pars pro toto): denouncing the fact that one of the study’s co-signers also publishes a medical journal is hardly the pinnacle of conflict of interest.

Furthermore, you nowhere provide any exculpatory elements, for example by giving an overview of other studies, or by comparing with other studies from the past.

The Zelenko protocol is one of those possible points of comparison.

I (and others) would also like to know to what extent zinc salts and vitamin D (and C) were also administered.

Sometimes one train hides another, and a fairly harmless drug like “Hydroxychloroquine” is just an alibi (partly a placebo) for also administering zinc and vitamin D (and C), which are not regarded as drugs but as “nutritional supplements”, and which can nevertheless play a very important role in the healing process.

The administration of “Azithromycin” is not so much meant to inhibit viruses; it seems to me rather intended to counter the possible and frequent accompanying bacterial lung infections (a virus rarely comes alone).

Finally, I wonder whether you go through the clinical trial studies of “Remdesivir” with an equally critical fine-toothed comb, and with an equally fine-toothed comb through the studies and trials of the mRNA and ‘S1’ spike inoculation products.

Yours Sincerely,

Edward Wouters,

Brussels
